For years, digital visibility was a matter of showing up.

Showing up on Google, showing up on the first page, showing up ahead of competitors.

But something has changed, quietly and profoundly.

Today, more and more people don’t search for information: they ask for it. They open ChatGPT, Gemini or another AI assistant and ask a direct question, expecting a clear, contextual and, above all, useful answer. They don’t want links. They want conclusions.

That new entry point to knowledge is where large language models (LLMs) come into play. And it’s also where many brands are beginning to realise that their visibility no longer depends only on rankings, but on how artificial intelligence interprets who they are, what they do and how relevant they are.


What is an LLM (and why it’s not like a search engine)

A Large Language Model is an artificial intelligence model trained on massive volumes of text to understand, connect and generate natural language. But reducing it to “an AI that writes” is missing the point.

The key difference compared to a traditional search engine is not the technology, but the role it plays.

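- A search engine: organises and ranks existing pages, then leaves the user to compare links and choose.

- An LLM: reads, connects and synthesises information, then hands the user a conclusion.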

When someone asks:

“How can brands improve their visibility in AI environments?”

The LLM doesn’t return ten articles for the user to choose from. It delivers an explanation. And in that explanation it chooses which examples, approaches or brands to mention.

That’s where the real battle for visibility begins.

The first big shift: from competing for clicks to competing to be part of the answer

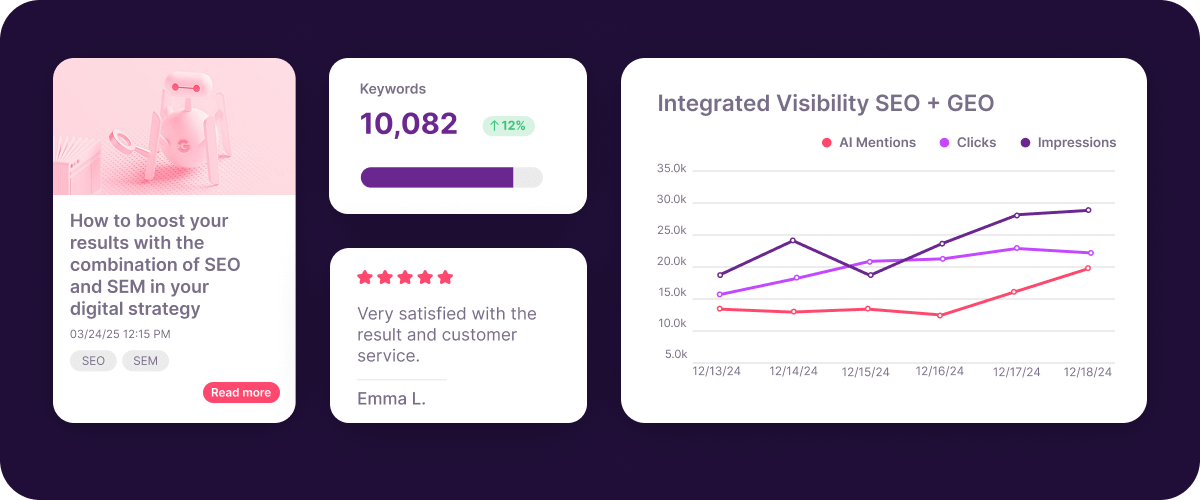

In the traditional model, visibility was measured in:

- impressions

- clicks

- sessions

With LLMs, a new scenario emerges: influence without traffic.

It is increasingly common for a user to:

- Discover a brand through an AI-generated answer

- Understand its value proposition before visiting the website

- Filter options without comparing links

In many cases, when the click finally happens, the decision is already almost made. This forces brands to shift their focus:

- less obsession with CTR

- greater attention to message clarity

- more importance placed on conceptual positioning

This is where GEO (Generative Engine Optimization) starts to make sense: optimising content so that AI understands it, trusts it and incorporates it into its answers.

When Google stops being just an index and starts responding

This shift isn’t happening only outside Google. It’s happening inside the search engine itself.

With the arrival of AI Overviews, Google has begun delivering AI-generated answers directly in the SERP, combining information from multiple sources and reducing the weight of the click.

For users, it’s convenient. For brands, it’s a warning.

The message is clear: the search engine is also adopting the logic of LLMs.

In this context, SEO stops being a purely technical race and becomes an exercise in clarity: the better a piece of content explains its subject, the easier it is for AI to use it as a reference.

The second big shift: structured data as the language of AI

If LLMs work through understanding, structured data becomes their best ally.

AI doesn’t “read” a website like a human. It interprets:

- attributes

- relationships

- hierarchies

- semantic coherence

This is where catalogues stop being mere campaign support and become a strategic visibility asset.
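To make "attributes, relationships and hierarchies" concrete: one widely used vocabulary for product data is schema.org, usually embedded in a page as JSON-LD. The sketch below maps a flat catalogue record to a schema.org `Product` object using only Python's standard library. The record's field names (`sku`, `attributes`, `related_skus` and so on), the sample product and the `>`-separated category path are hypothetical. The schema.org types and properties used (`Product`, `Brand`, `Offer`, `PropertyValue`, `additionalProperty`, `isRelatedTo`) are real, but which of them any particular AI system actually reads is an assumption this sketch cannot confirm.

```python
import json

# Hypothetical catalogue record; field names are illustrative, not a standard.
SAMPLE = {
    "sku": "TRL-001",
    "name": "Trail running shoe X",
    "brand": "ExampleBrand",
    "description": "Lightweight trail shoe with a grippy outsole for wet terrain.",
    "category": "Footwear > Running > Trail",  # hierarchy as a path (assumed convention)
    "price": 89.9,
    "currency": "EUR",
    "in_stock": True,
    "attributes": {"material": "Recycled mesh", "drop_mm": 6, "waterproof": False},
    "related_skus": ["TRL-SOCK-01"],
}


def product_to_jsonld(record: dict) -> str:
    """Map a flat catalogue record to schema.org Product markup as JSON-LD."""
    availability = "InStock" if record["in_stock"] else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "sku": record["sku"],
        "name": record["name"],
        "description": record["description"],
        "category": record["category"],
        # The brand becomes a typed entity rather than a bare string.
        "brand": {"@type": "Brand", "name": record["brand"]},
        "offers": {
            "@type": "Offer",
            "price": f"{record['price']:.2f}",
            "priceCurrency": record["currency"],
            "availability": f"https://schema.org/{availability}",
        },
        # Attributes: each one becomes an explicit name/value pair.
        "additionalProperty": [
            {"@type": "PropertyValue", "name": key, "value": value}
            for key, value in record.get("attributes", {}).items()
        ],
        # Relationships: point to other products by SKU.
        "isRelatedTo": [
            {"@type": "Product", "sku": sku} for sku in record.get("related_skus", [])
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    # The output is meant for a <script type="application/ld+json"> tag.
    print(product_to_jsonld(SAMPLE))
```

The point of the design is that nothing is left implicit: the brand, each attribute and each related product is a labelled node rather than a phrase buried in a description.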

A practical example

Two brands sell similar products:

- one uses generic descriptions and incomplete attributes

- the other works its catalogue with clear, structured and connected information

When AI has to recommend, compare or contextualise products, the choice is obvious: the one that is easier to understand.

In an environment where users directly ask:

“Which product is right for this specific case?”

The catalogue becomes the language AI uses to respond.
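The gap between the two brands in this example can be made measurable with a simple completeness check: how many of the attributes needed to answer a question like the one above are actually filled in. In this sketch the required-attribute list and both product records are hypothetical; a real catalogue would likely define requirements per category rather than globally.

```python
from __future__ import annotations

# Hypothetical attributes needed to answer "which product is right for this case?"
REQUIRED_ATTRIBUTES = ["name", "brand", "category", "material", "size_range", "use_case", "price"]


def is_filled(value: object) -> bool:
    """Treat None and blank strings as missing; keep 0 and False as real values."""
    return value is not None and str(value).strip() != ""


def completeness(record: dict, required: list[str] | None = None) -> tuple[float, list[str]]:
    """Return (share of required attributes present, list of missing ones)."""
    required = REQUIRED_ATTRIBUTES if required is None else required
    missing = [attr for attr in required if not is_filled(record.get(attr))]
    return 1 - len(missing) / len(required), missing


# Brand A: generic description, incomplete attributes.
generic = {"name": "Running shoe", "description": "Great for everyone", "price": 89.9}

# Brand B: clear, structured attributes.
structured = {
    "name": "Trail running shoe X",
    "brand": "ExampleBrand",
    "category": "Footwear > Running > Trail",
    "material": "Recycled mesh",
    "size_range": "EU 36-46",
    "use_case": "Trail running on wet terrain",
    "price": 89.9,
}

if __name__ == "__main__":
    for label, record in [("generic", generic), ("structured", structured)]:
        score, missing = completeness(record)
        print(f"{label}: {score:.0%} complete, missing {missing}")
```

Run as a script, this reports the generic record as 29% complete (missing `brand`, `category`, `material`, `size_range` and `use_case`) and the structured one as 100% complete.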

From showing products to reasoning about them

For a long time, the role of technology in marketing was relatively simple: showing products. Listing them properly, sorting them better and waiting for users to choose. With the arrival of LLMs, this approach falls short.

The next level appears when AI doesn’t just display information, but interprets and analyses it. Language models can detect performance patterns within a catalogue, understand which products perform best in specific contexts and prioritise those that make the most sense based on user intent at any given moment. It’s no longer just about what is sold, but why, when and for whom.

In practice, LLMs make it possible to:

- Identify performance patterns that aren’t obvious at first glance

- Prioritise products based on context, profitability or potential demand

- Adjust recommendations based on real user intent

- Anticipate opportunities before they impact the business

This type of reasoning is possible thanks to advanced AI-powered catalogue analysis, which turns large volumes of data into actionable decisions and helps teams move from intuition to data-driven criteria.
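The prioritisation idea in the list above (ranking products by context, profitability and potential demand) can be illustrated with a deliberately simple stand-in: keep only the products that match the user's intent, then sort them by a weighted score. This is not how an LLM reasons internally; it is a plain weighted ranking, and the `Product` fields, the intent labels and the 0.4/0.6 weights are hypothetical values chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Product:
    sku: str
    use_cases: set[str] = field(default_factory=set)  # contexts the product fits
    margin: float = 0.0   # hypothetical profitability signal, 0..1
    demand: float = 0.0   # hypothetical normalised demand forecast, 0..1


def prioritise(products: list[Product], intent: str,
               w_margin: float = 0.4, w_demand: float = 0.6,
               top_k: int = 3) -> list[str]:
    """Keep products that match the intent, then rank by a weighted score."""
    relevant = [p for p in products if intent in p.use_cases]
    ranked = sorted(
        relevant,
        key=lambda p: w_margin * p.margin + w_demand * p.demand,
        reverse=True,
    )
    return [p.sku for p in ranked[:top_k]]


CATALOGUE = [
    Product("A", {"trail", "road"}, margin=0.30, demand=0.90),
    Product("B", {"trail"}, margin=0.50, demand=0.40),
    Product("C", {"road"}, margin=0.60, demand=0.80),
    Product("D", {"trail"}, margin=0.20, demand=0.20),
]

if __name__ == "__main__":
    print(prioritise(CATALOGUE, "trail"))  # -> ['A', 'B', 'D']
    print(prioritise(CATALOGUE, "road"))   # -> ['C', 'A']
```

Shifting the weights towards margin (for example `w_margin=0.9, w_demand=0.1`) moves product B ahead of A for the same "trail" intent, which is the kind of context-dependent trade-off the list describes.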


But for AI to reason, it first needs to understand. This is where catalogue optimisation comes into play: clear attributes, coherent structures and well-connected data. A well-optimised catalogue not only improves campaigns but also becomes the foundation on which AI builds more relevant and accurate recommendations.

The result is smarter marketing: less reactive, more predictive and much more aligned with user intent. In this new scenario, visibility no longer depends solely on being present, but on fitting into AI’s reasoning when it has to decide which product, brand or option to recommend.

SEO, GEO and LLMs: the same story told in a different way

This is not about abandoning SEO or starting from scratch.

It’s about understanding that we are facing a natural evolution:

- SEO builds the foundation

- GEO decides who enters the answer

- Structured data sustains the entire system

In an ecosystem dominated by large language models, SEO, GEO and LLMs are not separate disciplines, but phases of the same evolution.

SEO remains the foundation that provides structure, authority and context, but it no longer competes only for rankings: it competes to be understandable and reusable by AI.

GEO expands this approach and focuses on how a brand is interpreted and cited in generative answers, where there are no result lists and no second chances: either you are part of the answer, or you simply don’t exist.

In this new scenario, LLMs act as intermediaries between brands and users, synthesising information, filtering options and building conclusions. This requires working with clear, consistent content supported by structured data and well-defined catalogues. It’s no longer just about being found, but about being understood, because visibility is no longer measured only in clicks, but in presence within the conversation that artificial intelligence maintains with the user.

The brands that adapt best are those that explain clearly what they do, structure their data properly and maintain a coherent narrative over time.

When visibility stops being a ranking and becomes an answer

The shift driven by large language models is not immediate or noisy, but it is profound. The way people discover, compare and understand brands is no longer happening exclusively through search engines and websites, but increasingly through AI-generated answers.

In this context, visibility no longer depends solely on appearing, but on being relevant, clear and consistent for AI. Brands that start today to work on content, data and narrative with this logic will not only adapt better to the new ecosystem, but will reach a key place sooner: being part of the answer when someone asks about their category.
